Biased AI outputs stay in the system because reporting them is harder than ignoring them.

Introducing SPOTLIGHT

This project is not affiliated with OpenAI or ChatGPT

Role

Lead Researcher

Timeline

August 2024 - December 2024

Skills

User Research & Interviews

Usability Testing

Storyboarding/Speed-Dating

Think-aloud Testing

Paper Prototyping

UX Design

Team

Project Manager

Lead Researcher

UX Designer

Visual/Graphic Designer

THE RESEARCH TLDR

SUMMARY

I led a team of four researchers through qualitative user research to understand why users weren't reporting bias in AI systems, uncovering that workflow friction - not lack of motivation - was the primary barrier.

METHODS USED

Usability testing, think-aloud testing, behavioral analysis, prototype validation

KEY METRICS

- 40% improvement in bias reporting participation rates
- Reduced reporting flow from 7 steps to 3 actions
- SUS score of 81.9, up from 62 originally (above the "good" usability threshold)

MY ROLE & RESEARCH IMPACT ON DESIGN

Led end-to-end research strategy and execution. Identified critical user behavior patterns that contradicted initial assumptions, enabling the design team to pivot from disruptive reporting flows to seamless workflow integration. My research directly shaped product direction and validated a scalable solution for AI bias collection.

PERCEIVED IMPACT TO CHATGPT

Our research suggests that making bias reporting feel natural rather than like a chore could significantly increase user participation and improve AI training data.

DEFINING THE PROBLEM

THE GOAL

As AI systems become more integrated into our daily lives, the ability to report bias becomes increasingly crucial. I led a team of four researchers to understand why users weren't reporting bias in generative AI systems.

Perception

If users cared about bias, they would report it

Discovery

The system itself was creating barriers to reporting

FINDING A FOCUS

Reverse Assumptions Activity

Our team began by listing out common assumptions about reporting in GenAI systems. Then, we flipped each assumption to challenge our thinking and uncover new perspectives.

This reverse assumptions activity revealed an opportunity we hadn't considered: could autofill technology make the reporting process less cumbersome?

INITIAL FOCUS

How might we leverage autofill technology to create a frictionless bias reporting process in GenAI systems?

PROJECT SCOPE

We narrowed our focus to ChatGPT's interface and workflow, testing with users who regularly use the platform.

ASSESSING THE CURRENT SYSTEM

TESTING WITH USERS

Think-aloud Sessions with 12 AI-users

I chose think-aloud testing because I suspected users weren't consciously aware of their reporting barriers. Watching them interact with ChatGPT's current system revealed something unexpected - they were naturally highlighting problematic text but had no idea this could connect to bias reporting. This observation shifted our entire approach from 'how to motivate reporting' to 'how to connect existing behaviors to reporting.'

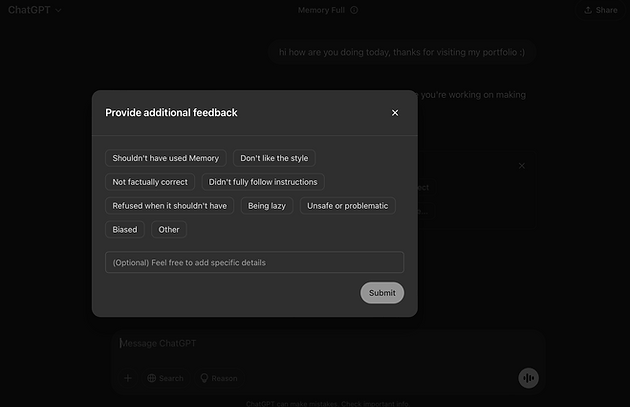

Most users see these as like/dislike buttons, missing their potential for reporting bias

Only after clicking thumbs down does the reporting option appear - a flow many users never discover

Critical bias reporting features hidden in a generic feedback flow

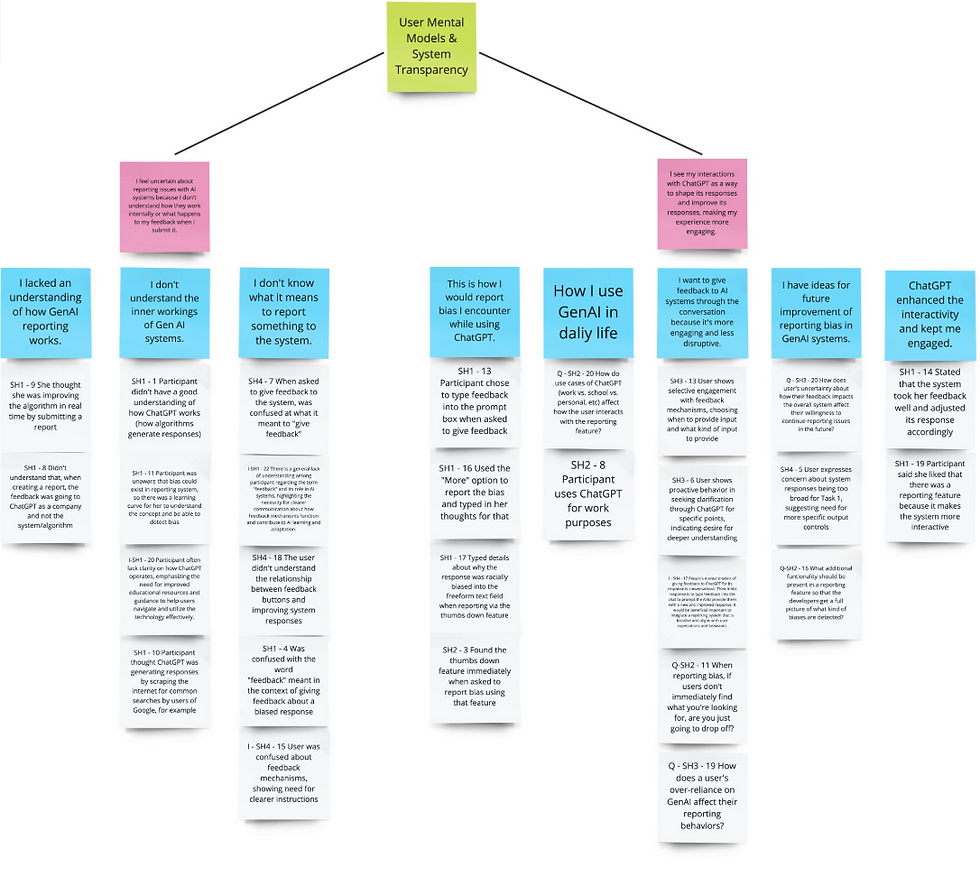

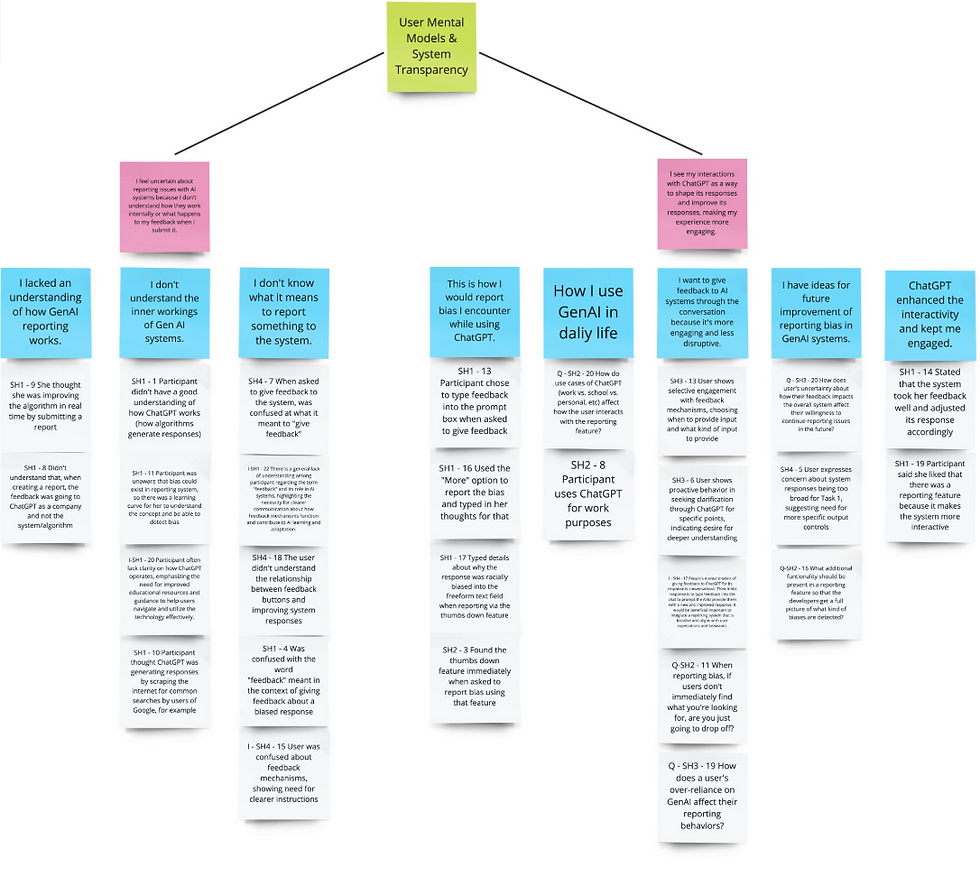

AFFINITY CLUSTERING

Affinity clustering revealed three barriers:

1

Unclear system processes

2

Difficulty recognizing bias

3

Lack of trust in the effectiveness of reporting

TASK FLOW DIAGRAM

Submitting a Bias Report

This task flow visualization showed the unnecessary complexity in the current reporting journey and the key drop-off points where users abandoned the process.

CONCEPT EXPLORATION

RAPID IDEATION

Crazy 8s Activity

We conducted a Crazy 8s exercise to rapidly generate solutions emerging from our research.

RAPID IDEATION

Storyboarding

From our Crazy 8s exploration, we identified four essential user needs. To visualize potential solutions, we created storyboards for each need, exploring safe, risky, and riskiest approaches.

1

Users need to be able to report bias in a way that does not interrupt their workflow.

2

Users need to understand how ChatGPT generates its responses.

3

Users need to feel engaged when reporting bias.

4

Users need to know where and how to submit a report when using ChatGPT.

Example of 'riskiest approach' for need #4

DESIGN OPPORTUNITIES

User Testing

The storyboard testing uncovered potential areas for solution development.

Reduce Cognitive Load

Cut reporting friction by auto-populating forms and nudging users when bias appears

Increase Social Proof

Make reporting feel valuable by showing how it contributes to better AI for all users

Integrate into Workflow

Let users flag issues through conversation instead of hunting for separate reporting buttons

Make Reporting Flexible

Support both quick flags and detailed reports to match users' varying time and energy levels

REFINED FOCUS

How might we seamlessly integrate reporting into the user's workflow, enabling them to report bias quickly, efficiently, and with a clear understanding of how their input will impact the system?

USABILITY TESTING

CREATING AN ARTIFACT

Paper Prototyping

We built a paper prototype to test our ideas in the real world. This approach let us see how users actually interacted with our reporting flow before committing to high-fidelity designs.

DESIGN BREAKTHROUGH

Text Highlighting → Contextual Reporting

1

We observed users naturally highlighting problematic text in ChatGPT responses. Instead of creating a separate reporting flow, we attached reporting options directly to this existing behavior.

2

When users highlight text, a small contextual menu appears with bias reporting options - turning an instinctive action into a reporting opportunity.

3

This approach eliminated the awkward "switching tasks" feeling that users complained about with traditional reporting systems.
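For readers who think in code, the highlight-to-report idea can be sketched roughly as follows. This is only an illustration of the interaction logic, not how the paper prototype or ChatGPT works: every name here (`HighlightSelection`, `menuFor`, `draftReport`) and the list of bias categories are hypothetical placeholders invented for the sketch. The point it tries to show is that the report is pre-filled from the highlight, so the user never leaves the conversation to fill in a form.

```typescript
// Hypothetical sketch: these names and categories are assumptions for illustration,
// not part of ChatGPT or of the Spotlight prototype.
type BiasCategory = "stereotype" | "exclusion" | "tone" | "other";

interface HighlightSelection {
  responseId: string;   // which AI response the highlight belongs to
  responseText: string; // full text of that response
  start: number;        // inclusive character offset of the highlight
  end: number;          // exclusive character offset of the highlight
}

interface BiasReportDraft {
  responseId: string;
  highlightedText: string;
  category: BiasCategory;
  note: string;
}

// Options a contextual menu could offer next to the highlight.
const MENU_OPTIONS: readonly BiasCategory[] = ["stereotype", "exclusion", "tone", "other"];

// Show the menu only when something meaningful is highlighted;
// empty or whitespace-only selections return no options.
function menuFor(selection: HighlightSelection): readonly BiasCategory[] {
  const text = selection.responseText.slice(selection.start, selection.end);
  return text.trim().length > 0 ? MENU_OPTIONS : [];
}

// Build a report that is pre-filled from the highlight, so the user
// only has to pick a category (and optionally add a note).
function draftReport(
  selection: HighlightSelection,
  category: BiasCategory,
  note: string = ""
): BiasReportDraft {
  const highlightedText = selection.responseText
    .slice(selection.start, selection.end)
    .trim();
  if (highlightedText.length === 0) {
    throw new Error("Nothing highlighted");
  }
  return { responseId: selection.responseId, highlightedText, category, note };
}

// Usage with made-up example text.
const exampleText = "Nurses are usually women, so ask her for help.";
const phrase = "Nurses are usually women";
const exampleStart = exampleText.indexOf(phrase);
const exampleSelection: HighlightSelection = {
  responseId: "resp-1",
  responseText: exampleText,
  start: exampleStart,
  end: exampleStart + phrase.length,
};
const exampleDraft = draftReport(exampleSelection, "stereotype");
console.log(menuFor(exampleSelection), exampleDraft);
```

In a browser, the offsets would most likely come from the page's text selection; the sketch keeps that part out so the logic stays testable without a DOM.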

VALIDATION THROUGH TESTING

Our paper prototype testing drew very positive feedback, with users quickly adopting the highlighting-to-report interaction pattern.

The prototype received an SUS score of 81.9, well above the commonly cited benchmark of 68 for average usability, reflecting the intuitive nature of the design.
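For context on how a number like this is produced, here is a minimal sketch of standard SUS scoring: ten items rated 1 to 5, where odd-numbered items contribute (rating - 1), even-numbered items contribute (5 - rating), and the sum is multiplied by 2.5 to give a 0-100 score. The function names `susScore` and `meanSus` are my own, and the ratings in the usage example are made up for illustration; they are not data from this study.

```typescript
// Standard SUS scoring for one participant: ten ratings, each an integer 1-5.
function susScore(responses: number[]): number {
  if (responses.length !== 10) {
    throw new Error("SUS needs exactly 10 item responses");
  }
  let total = 0;
  responses.forEach((r, i) => {
    if (Math.floor(r) !== r || r < 1 || r > 5) {
      throw new Error(`Item ${i + 1} must be an integer from 1 to 5`);
    }
    // Index 0, 2, 4... are items 1, 3, 5... (positively worded): rating - 1.
    // Index 1, 3, 5... are items 2, 4, 6... (negatively worded): 5 - rating.
    total += i % 2 === 0 ? r - 1 : 5 - r;
  });
  return total * 2.5; // scale the 0-40 sum to 0-100
}

// A study-level SUS score is the mean of the per-participant scores.
function meanSus(allResponses: number[][]): number {
  if (allResponses.length === 0) {
    throw new Error("No participants");
  }
  const sum = allResponses.map(susScore).reduce((a, b) => a + b, 0);
  return sum / allResponses.length;
}

// Usage with made-up ratings (not the study's data).
const illustrativeRatings: number[][] = [
  [4, 2, 5, 1, 4, 2, 5, 2, 4, 1],
  [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
];
console.log(meanSus(illustrativeRatings));
```

Because each participant's score is computed separately and then averaged, a study score such as 81.9 does not have to be a multiple of 2.5.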

"Nice, it's super quick. Not having to stop everything I'm doing and go somewhere else."

"I'd totally find this by accident just highlighting stuff. Like, it's right there where you're already working."

THE SOLUTION

Spotlight: A text-highlighting system for seamless bias reporting in ChatGPT.

My Research Diary

Taking a research-first approach uncovered opportunities a design-only process might have missed. By studying how users actually behave, rather than jumping to interface solutions, we discovered how to integrate into their natural workflow.

REFLECTING

WHAT I LEARNED

This project challenged my assumptions about user behavior and research methodology. What began as a straightforward bias reporting task evolved into a deeper exploration of how people naturally interact with AI.

My role as a researcher has expanded beyond just gathering data. I've learned to synthesize seemingly unrelated observations into meaningful patterns that drive design decisions.

I learned to trust unconventional research methods. The reverse assumptions activity felt uncomfortable at first, but taught me that disrupting my own thinking often leads to breakthrough insights.

I now feel confident challenging team assumptions with evidence rather than opinions. This project helped me find my voice as a researcher and advocate for what users actually need rather than what we think they want.