Organizational leaders making complex decisions lack a systematic way to consider diverse perspectives and potential unintended consequences before it's too late.

HONDA RESEARCH INSTITUTE US

Role

Lead Researcher

Project Manager

Timeline

January 2025 - August 2025

Topics

AI Systems

Decision-making

Personal Ethics

Future thinking

THE RESEARCH TLDR

Honda Research Institute wanted to understand how AI systems can center on human values. Through my research, I discovered that the real problem isn't making AI more ethical - it's that executives making high-stakes decisions are missing critical stakeholder perspectives that cause million-dollar implementation failures.

The team I led identified manufacturing executives as the key user and built Aura - a multi-agent system that assembles digital representatives of different stakeholders (frontline workers, safety officers, financial analysts) to surface consequences and alternatives executives might not consider alone.

RESULTS

SUS score of 83, decision confidence increased from 3.6/5 to 4.2/5, 40+ expert interviews with 700+ total participants. Honda is continuing the project for research & implementation.

WHAT I DID

I defined the research strategy, conducted executive interviews, identified the market opportunity, designed the multi-agent architecture, and led validation of business impact through literature reviews, ethnographic studies, comparative usability testing, survey design & analysis, A/B testing, concept validation, wizard-of-oz prototyping, and multimodal testing.

OUTCOME

A deployable system that prevents costly automation failures by surfacing missing stakeholder perspectives during critical executive decisions.

A scalable, multi-agent template that can be implemented for high-stakes decision makers in various other domains like healthcare.

THE BUSINESS PROBLEM

Manufacturing executives make automation decisions worth millions of dollars and affecting hundreds of jobs - but they're missing critical stakeholder input that causes implementation failures.

WHAT I DISCOVERED THROUGH RESEARCH

Current AI decision-making tools jump straight to answers without transparent reasoning, treating complex decisions as purely objective problems while hiding their logic behind proprietary algorithms.

COMPAS

Risk assessment algorithm used across 46 US states to predict recidivism for criminal justice decisions

Black defendants were 77% more likely to be incorrectly labeled as high-risk for violent crime

What's wrong?

AFST

Predictive tool used since 2016 to help child welfare workers decide whether to investigate neglect allegations

Could result in screen-in rate disparities between Black and non-Black families

What's wrong?

Through 40+ interviews, I found it's not about data quantity - it's about missing perspectives:

- 10-15 year knowledge gap as experienced workers retire
- Frontline workers excluded from automation decisions that affect their workflows
- Information scattered across systems, departments, and people not in the room
- Implementation failures that could be prevented with worker input

"Management made a major change to procedure without speaking with the individuals whose entire day the change would affect." - frontline floor worker

Poor automation decisions cost millions in workforce disruption, safety incidents, and failed implementations.

HOW I APPROACHED AN AMBIGUOUS SPACE

Why...

...high-stakes?

When decisions carry significant consequences, getting it wrong isn't just expensive - it's harmful to real people and communities.

...organizational?

Decisions impact many people beyond the decision maker, demanding transparent rationale and ethical frameworks that individual choices don't require.

...decision-making?

All actions stem from decisions, yet humans naturally draw from narrow perspectives and personal experience, creating blind spots that diverse viewpoints can illuminate.

...AI?

AI augments human decision-making as a teammate - handling data processing and perspective-gathering so leaders can focus on weighing options and making informed choices.

Instead of building another AI decision tool, I identified a different opportunity: executives need AI that helps them think through decisions, not tools that make decisions for them.

The strategic decisions I made:

After exploring other domains like healthcare and emergency response, I focused on manufacturing.

Instead of replacing human judgment, I informed the design of a collaborative thinking partner that surfaces missing stakeholder perspectives while keeping humans in control.

ZOOMING INTO ONE DOMAIN

Manufacturing & Robotics as our focus domain:

Transition to Industry 5.0

Ethics in human-AI collaboration needed now more than ever

AI-Ready Culture

Already familiar with AI implementations and open to adoption

Immediate Value

Ethical decision-making provides tangible ROI for leaders at Honda

High-Stakes Testing Ground

Solutions that work here easily transfer to lower-risk domains

Innovation-Driven

Fast-moving space that values new tools and approaches

WHAT THE RESEARCH SHOWED

CONTEXT BEATS FIXED SET OF VALUES

Through testing with 200+ participants, I found 80% changed their decisions when contextual information shifted, even after explicitly ranking personal values. This invalidated approaches based on fixed ethical rules, so I built the system around surfacing contextual perspectives rather than enforcing universal ethics.

MISSING VOICES, NOT DATA

My ethnographic research with frontline workers revealed invisible organizational knowledge that never reaches executives - shadow checklists workers maintain outside official systems, implementation challenges visible only to floor workers, and equipment reliability insights from daily operation. This led me to design agents representing specific stakeholder perspectives, especially frontline worker viewpoints.

VOICE ENABLES DEEPER ENGAGEMENT

4/5 executives found voice interaction more collaborative than text, describing it as "talking to something" rather than using a tool. So we prioritized conversational interaction design in the interaction prototype.

SPATIAL THINKING REVEALS PRIORITIES

During multimodal testing, 4/5 users used physical proximity to indicate importance without being prompted, placing key concerns closer to the decision center. This informed the spatial visualization approach for stakeholder perspectives.
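To make the proximity idea concrete, here is a minimal Python sketch of how node placement could be turned into a priority order: concerns closer to the decision center rank higher. The function name `rank_by_proximity`, the coordinate scheme, and the example concerns are all hypothetical and are not taken from the AURA prototype.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def rank_by_proximity(center: Point, nodes: Dict[str, Point]) -> List[Tuple[str, float]]:
    """Return (label, distance) pairs, closest to the decision center first."""
    # Euclidean distance from the decision center to each concern node.
    distances = {label: math.dist(center, pos) for label, pos in nodes.items()}
    # A smaller distance is read as higher importance.
    return sorted(distances.items(), key=lambda item: item[1])


if __name__ == "__main__":
    # Hypothetical canvas positions a participant might have chosen.
    placed = {
        "Worker safety": (3.0, 4.0),
        "Upfront cost": (6.0, 8.0),
        "Rollout timeline": (1.0, 0.0),
    }
    for label, distance in rank_by_proximity((0.0, 0.0), placed):
        print(f"{label}: {distance:.1f}")
```

Treating distance as the only signal is a simplification; a real interface might also weigh node size or the order in which nodes were placed.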

MULTI-DISCIPLINARY TEAMS SOLVE COMPLEX PROBLEMS BETTER

I observed how healthcare treatment teams naturally assemble different specialists around complex patient cases. Each brings their expertise without one person trying to hold all perspectives simultaneously. This insight led us to design a digital version - instead of trying to make one AI agent consider all stakeholder viewpoints, I created specialized agents that could each represent distinct perspectives around manufacturing decisions.

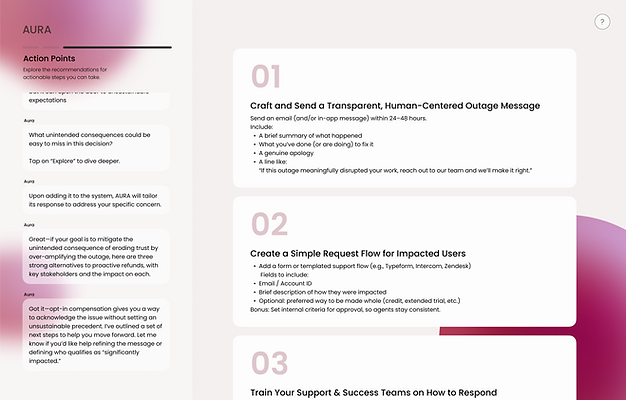

WHAT WE BUILT: AURA

Based on my research, we designed AURA - a system that creates a digital workforce of perspectives around high-stakes decisions.

When a manufacturing executive faces an automation decision, Aura assembles relevant AI agents: a Frontline Agent surfaces worker implementation concerns and practical challenges, a Safety Officer identifies risk patterns from similar past decisions, a Financial Officer models long-term costs including workforce disruption, and a Compliance Officer flags regulatory and union considerations.
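As a rough illustration of this assembly step, the Python sketch below models each stakeholder agent as a function that returns a perspective labeled with its source. Every name in it (`Perspective`, `AGENTS`, `assemble_agents`, `briefing`) is hypothetical, and the templated strings stand in for whatever models the real agents use; it is not the project's implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Perspective:
    agent: str    # which stakeholder agent produced this
    concern: str  # the consequence or question it surfaces


# Hypothetical stand-ins: a real agent would query a model with
# role-specific context instead of filling in a template.
def frontline(decision: str) -> Perspective:
    return Perspective("Frontline Agent", f"How does '{decision}' change daily floor workflows?")


def safety(decision: str) -> Perspective:
    return Perspective("Safety Officer", f"What risk patterns did past decisions like '{decision}' show?")


def financial(decision: str) -> Perspective:
    return Perspective("Financial Officer", f"What are the long-term costs of '{decision}', including workforce disruption?")


def compliance(decision: str) -> Perspective:
    return Perspective("Compliance Officer", f"Which regulatory or union considerations apply to '{decision}'?")


AGENTS: Dict[str, Callable[[str], Perspective]] = {
    "frontline": frontline,
    "safety": safety,
    "financial": financial,
    "compliance": compliance,
}


def assemble_agents(decision: str, roles: List[str]) -> List[Perspective]:
    """Collect one attributed perspective per requested role."""
    return [AGENTS[role](decision) for role in roles]


def briefing(decision: str, roles: List[str]) -> str:
    """Format the perspectives; the final call stays with the executive."""
    lines = [f"Decision: {decision}"]
    lines += [f"[{p.agent}] {p.concern}" for p in assemble_agents(decision, roles)]
    lines.append("Final decision: made by the executive.")
    return "\n".join(lines)


if __name__ == "__main__":
    print(briefing("automate assembly line 3", list(AGENTS)))
```

Keeping the agent name on every perspective reflects the transparency goal: the reader can always see which stakeholder view a statement came from.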

The breakthrough was making invisible stakeholder perspectives visible during critical decision moments, without removing human agency.

I validated this through testing with manufacturing executives: 90% found exploring unintended consequences most valuable for their thinking, and 100% said they would return to use the system for future decisions.

This is a fully-functioning MVP of AURA, with text and tap modality.

Full demo video with multi-modal interaction & multi-agent system integration coming soon

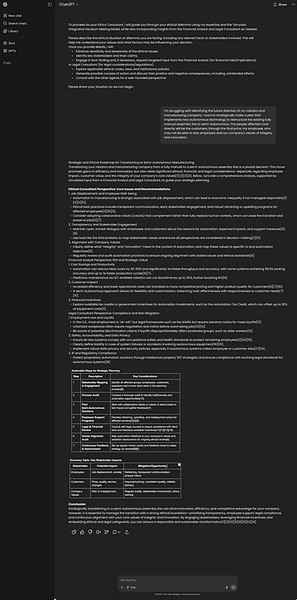

We tested many aspects of how AURA converses with its users.

Using ChatGPT's interface, we Wizard-of-Oz-ed 4 different response styles to understand which conversational behaviors and parameters we should give our model.

We tested with 40 managers in Manufacturing & Robotics companies and learned their preferences for the tone, level of detail, and style of the system's responses. They preferred responses that gave them direct, actionable feedback.

We learned that:

Dual-layer responses may provide more value to users, with clear & summarized information presented first, and analysis & details second.

Users need to be prompted with clarifying questions towards the end of a response. If users are presented with questions throughout the response, it can be difficult for the user to communicate clearly to the system.
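These two findings can be sketched as a simple response structure: a summary layer first, a detail layer second, and clarifying questions held until the end. The class and field names below are hypothetical, and the example content is invented for illustration; this is one way to encode the ordering, not the format AURA actually uses.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DualLayerResponse:
    summary: str                                   # layer 1: clear, short takeaway
    details: List[str] = field(default_factory=list)               # layer 2: analysis
    clarifying_questions: List[str] = field(default_factory=list)  # always rendered last

    def render(self) -> str:
        """Render summary first, details second, questions at the end."""
        parts = ["SUMMARY", self.summary]
        if self.details:
            parts.append("DETAILS")
            parts.extend(f"- {item}" for item in self.details)
        if self.clarifying_questions:
            parts.append("QUESTIONS")
            parts.extend(f"? {question}" for question in self.clarifying_questions)
        return "\n".join(parts)


if __name__ == "__main__":
    response = DualLayerResponse(
        summary="Pilot the automation on one line before a full rollout.",
        details=["Frontline workers flagged changeover steps the plan omits."],
        clarifying_questions=["Which shift would host the pilot?"],
    )
    print(response.render())
```

Because the questions live in their own field, they cannot end up interleaved with the analysis, which is the behavior participants found hard to respond to.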

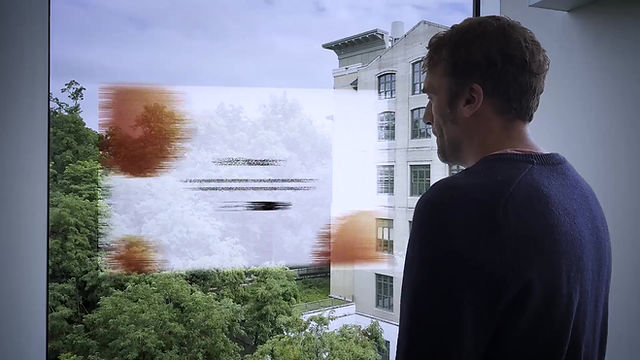

We also wanted to understand how a multi-modal multi-agent system could feel effective, seamless, and intuitive for users to be able to trust and use AURA.

First, we broke the problem down and conducted two rounds of bodystorming (simulation testing). During the first round, we tasked participants with using a prototyped voice interaction with AURA, and during the second round they tested a prototyped text interaction.

With our learnings from the first round, we created a prototype to test how users may interact using touch.

This whiteboard prototype allowed participants to move and adjust nodes, simulating how they would interact with AURA, and allowing us to document the behaviors and interactions.

The multi-modal interaction

We also tested numerous aspects of the MVP through heat maps, 3 rounds of usability testing, and surveys.

Heat maps allowed us to understand how to simplify our interface and highlight the aspects that users were interacting with the most.

Usability testing helped us refine the design. We added a progress bar, changed the language on the buttons, created a simplified web of nodes, and clarified the agents during the onboarding process for the user.

The survey results showed us that users didn't want to just communicate with a single "main representative." They preferred to see which agent was responsible for the information they were receiving, which increased both the transparency of the system and the user's trust in the data.

So with that, we created a transparent, digital workforce that augments human decision-making.

Our positioning

Instead of replacing human decision-makers or providing black-box answers, our system acts as a collaborative thinking partner. It systematically surfaces diverse perspectives and potential consequences while keeping humans in control of the final decisions, reducing cognitive load without removing human agency or judgment.

THE SYSTEM ARCHITECTURE

THE LINEAR RESEARCH PROCESS

Background Research

Literature Review | Jan-Feb 2025

Reviewed AI implementation across manufacturing, healthcare, emergency response and identified gaps in current decision-support systems. Current tools provide answers without transparency or stakeholder consideration.

Discovery Research

Round 1 Interviews | Feb 2025

11 subject-matter experts (6 manufacturing, 5 healthcare) to understand AI and ethics in practice within high-stakes domains. AI lacks contextual information needed for informed decisions.

Analogous Research | Feb 2025

6 Pittsburgh firefighters/EMTs to learn high-stakes decision-making in fast-paced environments through in-depth interviews on protocol vs. ethical decision tensions.

Round 2 Executive Interviews | Mar 2025

7 C-suite manufacturing leaders to understand priorities at executive level for AI implementation. Leaders need thinking support, not decision automation.

Concept Development & Testing

Ethics Survey | Mar 2025

100 general-population participants through a scenario-based survey following a Black Mirror film study, to understand ethical decision-making across scenarios.

Parallel Prototyping Round 1 | May 2025

5 low-fidelity prototypes with 15 participants testing core assumptions - whether context matters more than fixed values for ethical decisions.

Parallel Prototyping Round 2 | May 2025

Text vs. voice AI interaction comparison with 6 manufacturing executives through wizard-of-oz testing. Voice felt more collaborative than text interaction.

Parallel Prototyping Round 3 | Jun 2025

4 concepts with 24 participants testing edge cases and multi-agent approaches to identify single-agent vs. multi-agent preferences and trust factors.

Design & Validation

Usability Testing Round 1 | Jun 2025

40 participants (20 onboarding, 20 interface design) through unmoderated usability testing to optimize information architecture and user confidence.

Visualization Testing | Jun 2025

25 manufacturing executives comparing web of nodes vs. text vs. graphs. 52% preferred text + visuals combination over complex visualizations.

Multimodal Interaction Testing | Jun 2025

15 C-suite executives with high-fidelity prototype with document upload to test whether user control increases trust and engagement.

Sensitive Topics Survey | Jun 2025

42 manufacturing executives to understand comfort levels with AI for sensitive decisions, which informed guardrails and edge-case handling.

Decision-Making Process Interviews | Jun 2025

200 participants (100 everyday, 100 organizational decisions) through parallel surveys + qualitative interviews. Everyday vs. organizational decision-making processes are fundamentally different.

3 leaders in manufacturing & robotics through interviews to map out their current decision-making process.

Frontline Worker Research | Jul 2025

40 manufacturing floor workers through interviews and observational studies to understand how executive decisions affect daily operational realities. Workers maintain shadow systems and have implementation insights executives miss.

Final Validation | Jul 2025

30 manufacturing executives with high-fidelity MVP prototype testing. SUS score of 83; decision confidence increased from 3.6/5 to 4.2/5.
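For readers unfamiliar with the System Usability Scale, the sketch below shows the standard scoring arithmetic for one participant: ten items rated 1 to 5, where odd-numbered items contribute (rating - 1), even-numbered items contribute (5 - rating), and the sum is multiplied by 2.5 to give a 0-100 score. A study-level figure such as 83 is typically the mean of these per-participant scores. The ratings in the example are made up; the study's raw responses are not part of this page.

```python
from statistics import mean
from typing import Sequence


def sus_score(ratings: Sequence[int]) -> float:
    """Score one participant's 10 SUS ratings (each 1-5) on a 0-100 scale."""
    if len(ratings) != 10:
        raise ValueError("SUS needs exactly 10 item ratings")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("each rating must be between 1 and 5")
    total = 0
    for index, rating in enumerate(ratings):
        # Items 1, 3, 5, 7, 9 are positively worded; the rest are negative.
        total += (rating - 1) if index % 2 == 0 else (5 - rating)
    return total * 2.5


if __name__ == "__main__":
    # Invented ratings for two participants, for illustration only.
    participants = [
        [5, 2, 4, 1, 5, 2, 4, 2, 5, 1],
        [4, 2, 4, 2, 4, 1, 5, 2, 4, 2],
    ]
    print(mean(sus_score(p) for p in participants))
```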

This project & page are currently in-progress! :)